Artificial intelligence (AI) technology continues to evolve, for better or for worse. AI can benefit people who want to automate routine and cumbersome tasks. However, it can also be used for increasingly sophisticated phone and chatbot scams.

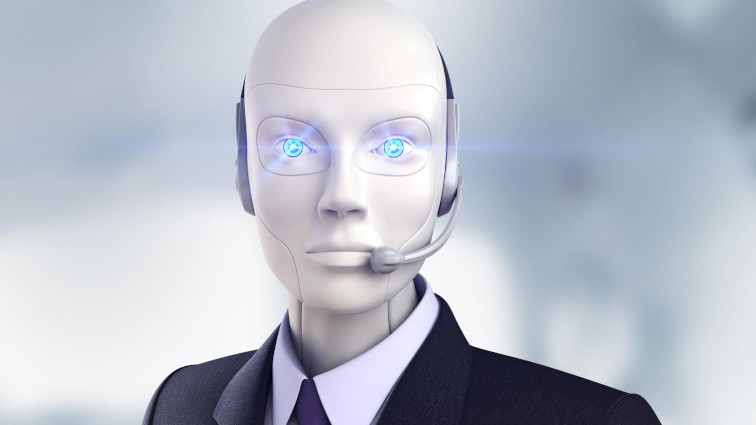

Speech AI is the use of messaging apps, speech assistants and chatbots to automate communication and create personalized customer experiences. Hundreds of millions of people use social media messaging platforms every day to connect with their friends and family.

Many of them also regularly talk to speech assistants like Amazon Alexa and Google Home. Speech AI opens up new possibilities for automating everyday tasks. As a result, messaging and speech programs are rapidly replacing traditional web and mobile applications and becoming new platforms for interactive conversations.

Nearly three quarters of people (73%) are unlikely to trust speech AI to answer simple calls or emails for them, but experts say that hesitation will fade as the technology becomes more prevalent, despite the security risks.

Speech AI can be inaccurate or hacked

Because digital assistants and conversational AI interfaces let people talk to machines in real time, there are several safety issues to consider. For example, inaccurate speech recognition can lead to incorrect messages being sent to the wrong people in your contact list. This happened to a couple in Portland, Oregon, in 2018, who learned that their Alexa had sent recordings of their conversations to the husband’s colleague, even though they had not asked it to record or send anything.

Hackers can also take control of digital assistants. This would allow them to eavesdrop on private or confidential conversations, which could lead to identity theft or fraud.